Concurrency in Scala

How does plain Scala approach concurrency? The answer is the Future “monad” (the actor model was also part of Scala, but it was deprecated in Scala 2.10). Practically everyone has used Scala Futures. People coming to Scala from Java are thrilled by the API it offers compared to Java’s Future. It is also quite fast and nicely composable. As a result, Future is the first choice wherever an asynchronous operation is required: it is used both for time-consuming computations and for calling external services, that is, for everything that may complete in the future. It makes writing concurrent programs a lot easier.

Basic Future semantics

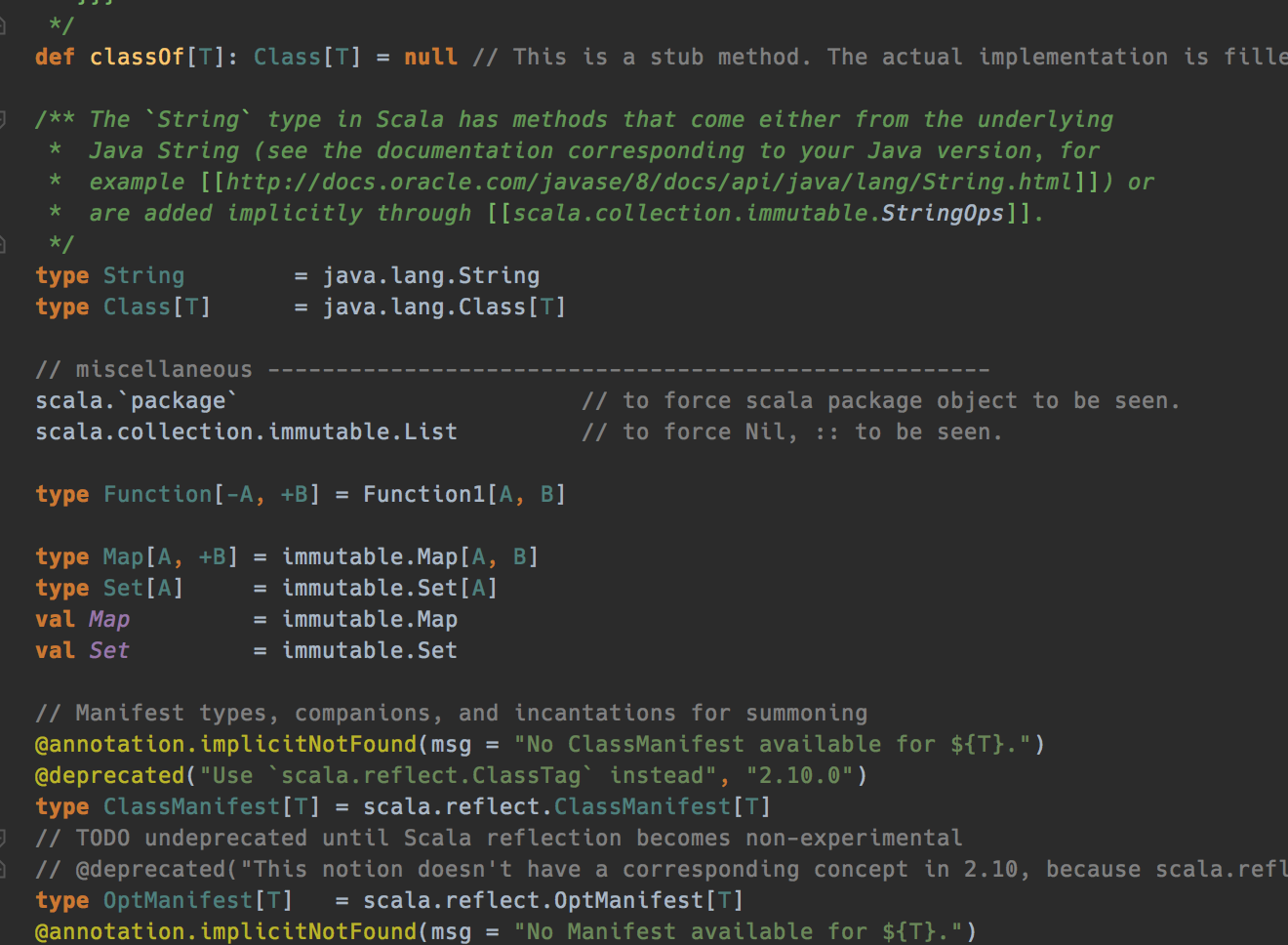

Scala’s Future[T], found in the scala.concurrent package, is a type that represents a computation that is expected to eventually produce a value of type T. Alas, the computation might go wrong or time out, so when the future is completed, it may not have been successful after all, in which case it contains an exception instead.
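To make the success and failure cases concrete, here is a minimal sketch using only the standard library. The object name FutureBasics and the outcome helper are illustrative; Await is used only so the example can inspect the completed result and should be avoided in real non-blocking code.

```scala
import scala.concurrent.{Await, Future}
import scala.concurrent.duration._
import scala.concurrent.ExecutionContext.Implicits.global
import scala.util.{Failure, Success, Try}

object FutureBasics {
  // Eventually completes successfully with a value of type Int.
  def succeeding: Future[Int] = Future(21 * 2)

  // The computation throws, so the Future completes with a failure instead of a value.
  def failing: Future[Int] = Future[Int](throw new IllegalStateException("computation broke"))

  // Blocks until the Future completes (demonstration only) and returns its Try result.
  def outcome[T](f: Future[T]): Try[T] = {
    Await.ready(f, 5.seconds)
    f.value.get // value is Some(...) once Await.ready has returned
  }

  def main(args: Array[String]): Unit = {
    outcome(succeeding) match {
      case Success(v)  => println(s"succeeded with $v")
      case Failure(ex) => println(s"failed with ${ex.getMessage}")
    }
    outcome(failing) match {
      case Success(v)  => println(s"succeeded with $v")
      case Failure(ex) => println(s"failed with ${ex.getMessage}")
    }
  }
}
```

Running main should print "succeeded with 42" followed by "failed with computation broke".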

Future vs functional programming

Let’s look at Scala Future from the functional programming perspective. It is commonly described as a monad. However, is Future really a monad?

What exactly is a monad? Briefly speaking, a monad is a container (a type constructor) defining at least two functions on type A:

- identity (unit):

      def unit[A](x: A): Future[A]

- bind (flatMap):

      def bind[A, B](fa: Future[A])(f: A => Future[B]): Future[B]

Additionally these functions must satisfy three laws: left identity, right identity and associativity.
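As a rough illustration, unit and bind can be written with the standard Future API (Future.successful and flatMap), and the three laws can be spot-checked for a few sample values. This is a sketch, not a proof: since Futures have no structural equality, the helper sameResult (a name introduced here) compares completed results, and the sample functions f and g are arbitrary, non-throwing choices.

```scala
import scala.concurrent.{Await, Future}
import scala.concurrent.duration._
import scala.concurrent.ExecutionContext.Implicits.global

object FutureMonadLaws {
  // unit and bind expressed with the standard Future API.
  def unit[A](x: A): Future[A] = Future.successful(x)
  def bind[A, B](fa: Future[A])(f: A => Future[B]): Future[B] = fa.flatMap(f)

  // Futures have no structural equality, so compare the values they complete with.
  def sameResult[A](x: Future[A], y: Future[A]): Boolean =
    Await.result(x, 5.seconds) == Await.result(y, 5.seconds)

  // Arbitrary, non-throwing sample functions used to exercise the laws.
  val f: Int => Future[Int] = n => Future(n + 1)
  val g: Int => Future[Int] = n => Future(n * 2)

  // Left identity: bind(unit(a))(f) == f(a)
  def leftIdentity(a: Int): Boolean = sameResult(bind(unit(a))(f), f(a))

  // Right identity: bind(m)(unit) == m
  def rightIdentity(m: Future[Int]): Boolean = sameResult(bind(m)(unit[Int]), m)

  // Associativity: bind(bind(m)(f))(g) == bind(m)(x => bind(f(x))(g))
  def associativity(m: Future[Int]): Boolean =
    sameResult(bind(bind(m)(f))(g), bind(m)(x => bind(f(x))(g)))
}
```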

From the mathematical perspective monads only describe values.

Scala Future provides both functions (Future.successful plays the role of unit and flatMap the role of bind) and, for ordinary values, follows the three laws, so it can be called a monad. However, there is also another view: a Future used for wrapping side effects (like calling an external API) cannot be treated as a monad. Why? Because such a Future is no longer just a value.

What is more, a Future starts executing as soon as it is constructed. This makes it hard to preserve referential transparency, which requires that an expression can be replaced with its evaluated value without changing the program’s behavior.

Example:

    import scala.concurrent.Future
    import scala.concurrent.ExecutionContext.Implicits.global

    def sideEffects = (
      Future { println("side effect") },
      Future { println("side effect") }
    )

    sideEffects
    sideEffects

It produces the following output:

    side effect
    side effect
    side effect
    side effect

Now, if Future were a value, we would be able to extract the common expression:

    lazy val anEffect = Future { println("side effect") }
    def sideEffects = (anEffect, anEffect)

Calling it the same way should then produce the same output as in the previous example:

    sideEffects
    sideEffects

But it does not; it prints:

side effect

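The first call to sideEffects forces the lazy val, which creates the Future and starts it exactly once; both elements of the tuple refer to that same Future. When sideEffects is called a second time, it reuses the already created Future, so the code inside it is not executed again.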

This behavior clearly breaks referential transparency. Whether it also means that Future is not a monad is a much longer discussion, so let’s leave it for now.
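The same experiment can be made deterministic by counting executions instead of printing. In this sketch (the object and helper names are illustrative) an AtomicInteger records how many times a Future body runs, and all Futures are awaited before the count is read.

```scala
import java.util.concurrent.atomic.AtomicInteger
import scala.concurrent.{Await, Future}
import scala.concurrent.duration._
import scala.concurrent.ExecutionContext.Implicits.global

object FutureSubstitution {
  // Calls sideEffects twice, waits for all four Futures, then reports how many bodies ran.
  private def callTwiceAndCount(counter: AtomicInteger, sideEffects: () => (Future[Int], Future[Int])): Int = {
    val (a, b) = sideEffects()
    val (c, d) = sideEffects()
    Await.ready(Future.sequence(List(a, b, c, d)), 5.seconds)
    counter.get()
  }

  // Original version: every call to sideEffects constructs (and starts) two new Futures.
  def inlined(): Int = {
    val counter = new AtomicInteger(0)
    def sideEffects = (Future(counter.incrementAndGet()), Future(counter.incrementAndGet()))
    callTwiceAndCount(counter, () => sideEffects)
  }

  // "Refactored" version: the common expression is extracted into a lazy val.
  def extracted(): Int = {
    val counter = new AtomicInteger(0)
    lazy val anEffect = Future(counter.incrementAndGet())
    def sideEffects = (anEffect, anEffect)
    callTwiceAndCount(counter, () => sideEffects)
  }
}
```

With these definitions inlined() returns 4 while extracted() returns 1: extracting the common expression changed the program’s behavior.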

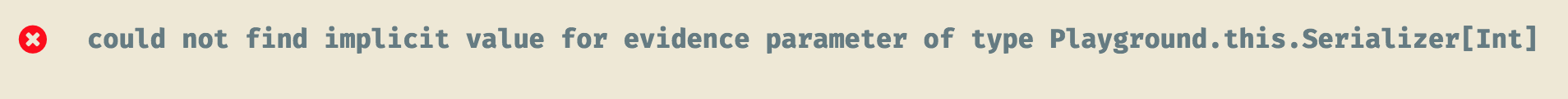

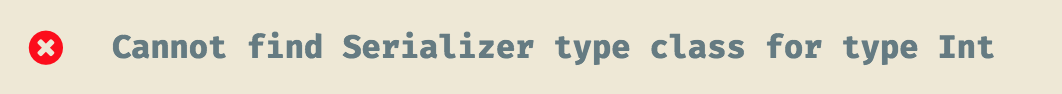

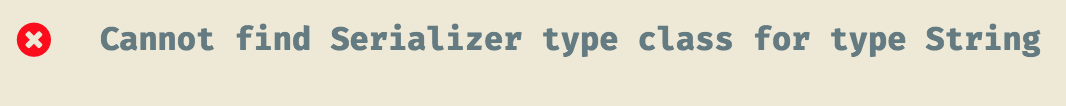

Another problem with Future is the ExecutionContext. The two are inseparable: Future (and its functions like map, foreach, etc.) needs to know where to execute, as the signature of foreach shows:

def foreach[U](f: T => U)(implicit executor: ExecutionContext): Unit

In practice, the scala.concurrent.ExecutionContext.Implicits.global execution context is often imported everywhere Future is used. This is bad practice, because the execution context is decided early and then stays fixed. It also makes it impossible for the callers of a function to decide on which ExecutionContext they want to run it. Of course, it is possible to make the ExecutionContext a parameter of the function, but then it propagates throughout the codebase: it has to be added to the whole stack of function calls, which is boilerplate. Preferably we want to decide on the execution context as late as possible, generally when the program starts the actual execution.
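One way to defer the decision, sketched below, is to have library code require an implicit ExecutionContext and let the top-level caller supply a concrete pool. The names tanSum and runOnDedicatedPool and the four-thread pool size are assumptions for illustration; note that the sketch still carries the boilerplate cost described above, since every function in the call chain needs the implicit parameter.

```scala
import java.util.concurrent.Executors
import scala.concurrent.{Await, ExecutionContext, Future}
import scala.concurrent.duration._

object LateExecutionContext {
  // Library code: does not pick a pool itself, it requires the caller to provide one.
  def tanSum(angles: Seq[Double])(implicit ec: ExecutionContext): Future[Double] =
    Future.traverse(angles)(a => Future(math.tan(a))).map(_.sum)

  // Top-level code: decides, as late as possible, which pool runs the work.
  def runOnDedicatedPool(angles: Seq[Double]): Double = {
    val pool = Executors.newFixedThreadPool(4)
    implicit val ec: ExecutionContext = ExecutionContext.fromExecutorService(pool)
    try Await.result(tanSum(angles), 5.seconds)
    finally pool.shutdown() // release the threads once the result is available
  }
}
```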

Future performance

Let’s look at the performance of Scala Future. In a later section we will compare it with the constructs I suggest as a replacement.

We will run two computations:

- eight operations, run both concurrently and sequentially, each computing the trigonometric tangent of an angle, with the sum of the values as the result

- three operations, run both concurrently and sequentially, each finding all prime numbers lower than n, with the sum of those primes as the result
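The two workloads could look roughly like the following sketch. This is an assumption for illustration, not the code from the benchmark repository; in particular the trial-division prime test and all function names are hypothetical.

```scala
import scala.concurrent.{ExecutionContext, Future}

object BenchmarkWorkloads {
  // Sum of tangents computed one after another on the calling thread.
  def tanSumSequential(angles: Seq[Double]): Double = angles.map(math.tan).sum

  // Same sum, but every tangent is computed in its own Future; all Futures are
  // created up front and only then combined.
  def tanSumConcurrent(angles: Seq[Double])(implicit ec: ExecutionContext): Future[Double] =
    Future.sequence(angles.map(a => Future(math.tan(a)))).map(_.sum)

  // Naive trial-division primality test (deliberately CPU-heavy).
  def isPrime(k: Int): Boolean =
    k >= 2 && (2 to math.sqrt(k.toDouble).toInt).forall(k % _ != 0)

  // Sum of all primes strictly lower than n; Long avoids overflow for larger n.
  def primeSum(n: Int): Long = (2 until n).filter(isPrime).map(_.toLong).sum

  // One Future per upper bound n, run concurrently.
  def primeSumsConcurrent(ns: Seq[Int])(implicit ec: ExecutionContext): Future[Seq[Long]] =
    Future.traverse(ns)(n => Future(primeSum(n)))
}
```

Creating the Futures before combining them is what makes tanSumConcurrent concurrent. Writing the Futures directly inside a for-comprehension would start each one only after the previous one has completed, which is the “accidental sequentiality” mentioned in the conclusion.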

The source code for the test can be found here: https://github.com/damianbl/scala-future-benchmark

The machine used to run this benchmark is an 8-core Intel Core i9 at 2.4 GHz with 32 GiB of memory, running macOS Catalina 10.15.2.

Results:

    [info] Result "com.dblazejewski.benchmark.FutureMathTanBenchmark.futureForComprehensionTestCase":
    [info]   17049.404 ±(99.9%) 259.822 ns/op [Average]
    [info]   (min, avg, max) = (16682.279, 17049.404, 18281.647), stdev = 346.855
    [info]   CI (99.9%): [16789.582, 17309.226] (assumes normal distribution)

What to use instead of Future?

Now that we know some of the limitations of Scala Future, let’s introduce a possible replacement.

In recent months there has been a lot of hype around the ZIO library.

At first glance ZIO looks really powerful. It provides effect data types that are meant to be highly performant (we will see this in the performance tests), functional, easily testable and resilient. They compose very well and are easy to reason about.

ZIO contains a number of data types that help to run concurrent and asynchronous programs. The most important are:

- Fiber – a fiber models an IO that has started running; it is more lightweight than a thread

- ZIO – a value that models an effectful program, which might fail or succeed

- Promise – it is a model of a variable that may be set a single time, and awaited on by many fibers

- Schedule – schedule is a model of a recurring schedule, which can be used for repeating successful IO values, or retrying failed IO values

The main building block of ZIO is the functional effect:

IO[E, A]

IO[E, A] is an immutable data type that describes an effectful program. The program may fail with an error of type E or succeed with a value of type A.
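To illustrate what “describes a program” means, here is a deliberately tiny, hypothetical effect type written with the standard library only. ToyIO is not ZIO’s implementation or API; it only mirrors the idea of an immutable value with an error type E and a success type A that does nothing until it is explicitly run.

```scala
import scala.util.Try

// Hypothetical toy effect type: NOT ZIO's implementation, only the same idea.
// An immutable description of a program that fails with E or succeeds with A.
final case class ToyIO[+E, +A](unsafeRun: () => Either[E, A]) {
  // Transforms the success value; nothing is executed here.
  def map[B](f: A => B): ToyIO[E, B] = ToyIO(() => unsafeRun().map(f))

  // Sequences another description after this one; the first error short-circuits.
  def flatMap[E1 >: E, B](f: A => ToyIO[E1, B]): ToyIO[E1, B] =
    ToyIO(() => unsafeRun().flatMap(a => f(a).unsafeRun()))
}

object ToyIO {
  def succeed[A](a: => A): ToyIO[Nothing, A] = ToyIO(() => Right(a))
  def fail[E](e: => E): ToyIO[E, Nothing] = ToyIO(() => Left(e))
}

object ToyIOExample {
  // Describes parsing; the Try only executes when the description is run.
  def parseAge(s: String): ToyIO[String, Int] =
    ToyIO(() => Try(s.trim.toInt).toOption match {
      case Some(n) => Right(n)
      case None    => Left(s"not a number: $s")
    })

  def checkAdult(age: Int): ToyIO[String, Int] =
    if (age >= 18) ToyIO.succeed(age) else ToyIO.fail(s"too young: $age")

  // Composing descriptions: still nothing has run.
  def program(input: String): ToyIO[String, Int] =
    for {
      age   <- parseAge(input)
      adult <- checkAdult(age)
    } yield adult
}
```

Building program("42") runs nothing; calling unsafeRun() on it yields Right(42), while the inputs "12" and "abc" yield Left values carrying the corresponding error messages.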

I would like to dive a bit deeper into the Fiber data type. Fiber is the basic building block of the ZIO concurrency model, and it differs significantly from the thread-based concurrency model. In the thread-based model, every JVM thread is mapped to an OS thread, whereas a ZIO Fiber is more like a green thread. Green threads are threads that are scheduled by a runtime library or virtual machine instead of natively by the underlying operating system. They make it possible to emulate a multithreaded environment without relying on the operating system’s capabilities. In many cases green threads outperform native threads (they are much cheaper to create and to switch between), but there are also cases where native threads are the better choice.
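The difference between runtime scheduling and OS scheduling can be shown with a toy round-robin scheduler: tasks are broken into steps, and our own code, not the operating system, decides which task runs next, all on a single OS thread. This only illustrates the green-thread idea and is not how ZIO implements fibers; the Task type and the runAll and demo functions are hypothetical.

```scala
import scala.collection.mutable

// Toy illustration of green threads: scheduling is done by our own code, not by the OS.
// This is NOT how ZIO implements fibers.
object GreenThreads {
  // A task is a sequence of small steps; after each step it yields control back.
  final case class Task(name: String, steps: Iterator[() => Unit])

  // Round-robin scheduler: every task runs on the calling OS thread.
  def runAll(tasks: Seq[Task]): Unit = {
    val queue = mutable.Queue(tasks: _*)
    while (queue.nonEmpty) {
      val task = queue.dequeue()
      if (task.steps.hasNext) {
        task.steps.next()() // run exactly one step of this task
        queue.enqueue(task) // then put it at the back of the queue
      }                     // finished tasks are simply dropped
    }
  }

  // Two tasks interleaved on one OS thread; returns the order in which steps ran.
  def demo(): List[String] = {
    val log = mutable.ListBuffer.empty[String]
    def step(label: String): () => Unit = () => { log += label; () }
    runAll(Seq(
      Task("A", Iterator(step("A1"), step("A2"))),
      Task("B", Iterator(step("B1"), step("B2"), step("B3")))
    ))
    log.toList
  }
}
```

demo() returns List("A1", "B1", "A2", "B2", "B3"): the two tasks interleave even though only one OS thread is involved.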

Fibers are really powerful and nicely isolated from each other. Every fiber has its own stack and its own interpreter, which it uses to execute the IO program.

There is also one interesting thing about Fibers: they can be garbage collected. Let’s imagine a Fiber that runs forever. If it is not doing any work at a particular moment and no other component can reach it, it can actually be garbage collected, so there is no memory leak. Threads, in contrast, have to be shut down and managed carefully in such a case.

Let’s see how we can use Fibers. Imagine a situation where we have two functions:

- validateData – does complex data validation

- processData – does complex and time-consuming data processing

We would like to start validating and processing the data at the same time. If the validation succeeds, the processing continues; if it fails, we stop the processing. Implementing this with ZIO and Fibers is pretty straightforward:

    val result = for {
      processDataFiber  <- processData(data).fork
      validateDataFiber <- validateData(data).fork
      isValid           <- validateDataFiber.join
      _                 <- if (!isValid) processDataFiber.interrupt
                           else IO.unit
      processingResult  <- processDataFiber.join
    } yield processingResult

It starts processing and validating the data in lines (2) and (3). The fork function returns an effect that forks this effect into a separate fiber; the fiber is returned immediately, without waiting for the forked effect to finish. In line (4) the validation fiber is joined, which suspends the joining fiber until the result of the validation fiber has been determined. If the validation result is false, we interrupt the processData fiber immediately; otherwise we continue. Finally, in line (7) we wait for the data processing to finish.
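For comparison, a rough equivalent with Scala Futures is sketched below. Both Futures start running immediately, much like fork, but scala.concurrent.Future has no interrupt operation, so cancellation has to be cooperative: in this sketch the processing code checks a flag between chunks. The bodies of validateData and processData here are hypothetical stand-ins (a non-negativity check and a sum of doubled values), not the functions from the ZIO example.

```scala
import java.util.concurrent.atomic.AtomicBoolean
import scala.concurrent.{ExecutionContext, Future}

object ValidateAndProcess {
  final class Cancelled extends RuntimeException("processing cancelled")

  // Hypothetical stand-in: data is valid when no element is negative.
  def validateData(data: Seq[Int])(implicit ec: ExecutionContext): Future[Boolean] =
    Future(data.forall(_ >= 0))

  // Hypothetical stand-in: sums doubled values chunk by chunk, checking the flag
  // between chunks because a Scala Future cannot be interrupted from outside.
  def processData(data: Seq[Int], cancelled: AtomicBoolean)(implicit ec: ExecutionContext): Future[Long] =
    Future {
      data.grouped(2).foldLeft(0L) { (acc, chunk) =>
        if (cancelled.get()) throw new Cancelled
        acc + chunk.map(_.toLong * 2).sum
      }
    }

  def run(data: Seq[Int])(implicit ec: ExecutionContext): Future[Long] = {
    val cancelled = new AtomicBoolean(false)
    // Both Futures start running as soon as they are created (similar to fork).
    val processing = processData(data, cancelled)
    val validation = validateData(data)
    validation.flatMap { isValid =>
      if (isValid) processing // similar to joining the processing fiber
      else {
        cancelled.set(true) // best effort: processing may already have finished
        Future.failed(new IllegalArgumentException("validation failed"))
      }
    }
  }
}
```

With this sketch, run(Seq(1, 2, 3)) completes with 12, while run(Seq(1, -2)) fails with the validation error.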

The ZIO code with fork and join looks like code that should run immediately, just like similar code using Scala Futures. However, this is not the case with ZIO. The ZIO program only describes the functionality; it does not say how to run it. Running is deferred as late as possible, and this is also what makes the code purely functional. We can create a runtime and pass it around to run the effects (DefaultRuntime is part of the pre-1.0 ZIO API; later ZIO versions obtain a runtime differently):

    val runtime = new DefaultRuntime {}
    runtime.unsafeRun(DataProcessor.process())
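The practical effect of describing instead of running can be sketched with a minimal, hypothetical Effect type built on a plain function value (this is not ZIO’s API). Unlike the Future example earlier, extracting the common expression into a val no longer changes how many times the side effect runs.

```scala
import java.util.concurrent.atomic.AtomicInteger

object DescriptionVsFuture {
  // Hypothetical minimal effect: a description that runs only when run() is called.
  final case class Effect[A](run: () => A) {
    // Describes running this effect and then `that`, pairing the results.
    def zip[B](that: Effect[B]): Effect[(A, B)] = Effect(() => (run(), that.run()))
  }

  // Counterpart of the first Future example: both effects written out inline.
  def inlined(counter: AtomicInteger): Effect[(Int, Int)] =
    Effect(() => counter.incrementAndGet()).zip(Effect(() => counter.incrementAndGet()))

  // Counterpart of the second example: the common expression extracted into a val.
  def extracted(counter: AtomicInteger): Effect[(Int, Int)] = {
    val anEffect = Effect(() => counter.incrementAndGet())
    anEffect.zip(anEffect)
  }

  // Builds a program, runs it twice and reports how often the side effect ran.
  def countRuns(build: AtomicInteger => Effect[(Int, Int)]): Int = {
    val counter = new AtomicInteger(0)
    val program = build(counter) // building the description runs nothing
    program.run()
    program.run()
    counter.get()
  }
}
```

countRuns returns 4 for both inlined and extracted, because each call to run() re-executes the description.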

With this basic knowledge, we can rewrite the benchmarks we previously wrote with Scala Future using the ZIO library.

The code can be found here:

Here are the results:

    [info] Result "com.dblazejewski.benchmark.zio.ZioMathTanBenchmark.zioForComprehensionTestCase":
    [info]   1090.656 ±(99.9%) 79.370 ns/op [Average]
    [info]   (min, avg, max) = (1007.467, 1090.656, 1164.134), stdev = 52.498
    [info]   CI (99.9%): [1011.286, 1170.026] (assumes normal distribution)

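The performance difference is enormous: in this benchmark the ZIO version averages about 1,091 ns/op, compared with about 17,049 ns/op for the Future version, roughly 15.6 times faster. This is in line with what John A. De Goes published on Twitter some time ago: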

In this post I am not going to dive into the details of using the ZIO library. There are already great resources available:

- the ZIO website: https://zio.dev/

- blog post by Adam Warski

- A Tour of ZIO by John A. De Goes

- Functional Scala - Modern Data Driven Applications with ZIO Streams by Itamar Ravid

Conclusion

Scala Future was a very good improvement over the poor Java Future. When jumping from Java to Scala, the difference in "developer-friendliness" was so huge that every return to Java was a real pain.

However, the deeper you dive into functional programming, the more limitations of Scala Future you see. Lack of referential transparency, accidental sequentiality and the ever-present ExecutionContext are only a few of them.

ZIO is still at an early stage, but I am sure it will really shine in the future. The current trend in the Scala ecosystem, a shift from the early object-functional style toward purely functional programming, will also favour purely functional solutions like ZIO.